Implementing Natural Language Processing in Python, ML.NET, Apache Spark and Azure Cognitive Services

Say hello to my co-writer today, Atticus Jack.

This article is a continuation of a series in which I explore different implementations of machine learning tasks across different programming ecosystems. Today, I'm going over ML.NET, Python, Apache Spark, and Azure Cognitive Services. The task is natural language processing, and specifically sentiment analysis. While I cover the Python and C# ecosystems, Atticus will be diving into the Java implementation with Apache Spark.

What is Natural Language Processing?

Natural language processing at its core is simply a method for a computer to understand human language. We've been doing this in chat bots for ages using if/then trees and switches. However, in recent years natural language processing has become more sophisticated. Some approaches use statistical analysis on phrases, while others use neural networks to analyze and process words and sentences.

Natural language processing has come a long way, and it's used everywhere from predictive text to speech-to-text, not to mention the newer, more sophisticated chat bots that exist now.

But the ecosystems that exist for building a natural language processing solution vary quite a lot.

We'll be providing commentary and opinions along the way as well, just to warn you.

Explaining the data

The data is very simple. It's a tab-separated file with one column of review text and one column recording the tone of that text: 0 for negative or 1 for positive. This is a standard data set to play with for sentiment analysis, which is what we're going to do.
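
To make the file layout concrete, here is a minimal Python sketch of reading such a tab-separated file with the standard library. It is only an illustration, not the loading code used later in any of the implementations: the column names "Review" and "Liked" are taken from the sample header, the three rows are copied from the sample, and the helper name load_reviews is made up for this example.

```python
import csv
import io

# A few rows copied from the sample in this article. The header names come
# from the sample table; in the real file the two columns are tab-separated.
SAMPLE_TSV = (
    "Review\tLiked\n"
    "Wow... Loved this place.\t1\n"
    "Crust is not good.\t0\n"
    "Not tasty and the texture was just nasty.\t0\n"
)


def load_reviews(handle):
    """Return a list of (review_text, liked) pairs from a tab-separated file.

    `load_reviews` is a hypothetical helper written for this sketch.
    """
    # QUOTE_NONE keeps quotation marks inside reviews as literal characters.
    reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    return [(row["Review"], int(row["Liked"])) for row in reader]


reviews = load_reviews(io.StringIO(SAMPLE_TSV))
positive = sum(liked for _, liked in reviews)
print(f"{len(reviews)} reviews, {positive} positive")
```

To read from disk instead of the in-memory sample, the same function can be given a file opened with open(path, newline="", encoding="utf-8"), where path is wherever you saved the data set.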

The Azure Cognitive Services example does not use this data set, although the service could certainly start making predictions on it right away. The reason we don't is that after a certain number of predictions Azure will start to accrue charges. I opted to stay well within the boundaries of the free tier.

Take a look at the data:

| Review Liked | |

| Wow... Loved this place. 1 | |

| Crust is not good. 0 | |

| Not tasty and the texture was just nasty. 0 | |

| Stopped by during the late May bank holiday off Rick Steve recommendation and loved it. 1 | |

| The selection on the menu was great and so were the prices. 1 | |

| Now I am getting angry and I want my damn pho. 0 | |

| Honeslty it didn't taste THAT fresh.) 0 | |

| The potatoes were like rubber and you could tell they had been made up ahead of time being kept under a warmer. 0 | |

| The fries were great too. 1 | |

| A great touch. 1 | |

| Service was very prompt. 1 | |

| Would not go back. 0 | |

| The cashier had no care what so ever on what I had to say it still ended up being wayyy overpriced. 0 | |

| I tried the Cape Cod ravoli, chicken, with cranberry...mmmm! 1 | |

| I was disgusted because I was pretty sure that was human hair. 0 | |

| I was shocked because no signs indicate cash only. 0 | |

| Highly recommended. 1 | |

| Waitress was a little slow in service. 0 | |

| This place is not worth your time, let alone Vegas. 0 | |

| did not like at all. 0 | |

| The Burrittos Blah! 0 | |

| The food, amazing. 1 | |

| Service is also cute. 1 | |

| I could care less... The interior is just beautiful. 1 | |

| So they performed. 1 | |

| That's right....the red velvet cake.....ohhh this stuff is so good. 1 | |

| - They never brought a salad we asked for. 0 | |

| This hole in the wall has great Mexican street tacos, and friendly staff. 1 | |

| Took an hour to get our food only 4 tables in restaurant my food was Luke warm, Our sever was running around like he was totally overwhelmed. 0 | |

| The worst was the salmon sashimi. 0 | |

| Also there are combos like a burger, fries, and beer for 23 which is a decent deal. 1 | |

| This was like the final blow! 0 | |

| I found this place by accident and I could not be happier. 1 | |

| seems like a good quick place to grab a bite of some familiar pub food, but do yourself a favor and look elsewhere. 0 | |

| Overall, I like this place a lot. 1 | |

| The only redeeming quality of the restaurant was that it was very inexpensive. 1 | |

| Ample portions and good prices. 1 | |

| Poor service, the waiter made me feel like I was stupid every time he came to the table. 0 | |

| My first visit to Hiro was a delight! 1 | |

| Service sucks. 0 | |

| The shrimp tender and moist. 1 | |

| There is not a deal good enough that would drag me into that establishment again. 0 | |

| Hard to judge whether these sides were good because we were grossed out by the melted styrofoam and didn't want to eat it for fear of getting sick. 0 | |

| On a positive note, our server was very attentive and provided great service. 1 | |

| Frozen pucks of disgust, with some of the worst people behind the register. 0 | |

| The only thing I did like was the prime rib and dessert section. 1 | |

| It's too bad the food is so damn generic. 0 | |

| The burger is good beef, cooked just right. 1 | |

| If you want a sandwich just go to any Firehouse!!!!! 1 | |

| My side Greek salad with the Greek dressing was so tasty, and the pita and hummus was very refreshing. 1 | |

| We ordered the duck rare and it was pink and tender on the inside with a nice char on the outside. 1 | |

| He came running after us when he realized my husband had left his sunglasses on the table. 1 | |

| Their chow mein is so good! 1 | |

| They have horrible attitudes towards customers, and talk down to each one when customers don't enjoy their food. 0 | |

| The portion was huge! 1 | |

| Loved it...friendly servers, great food, wonderful and imaginative menu. 1 | |

| The Heart Attack Grill in downtown Vegas is an absolutely flat-lined excuse for a restaurant. 0 | |

| Not much seafood and like 5 strings of pasta at the bottom. 0 | |

| The salad had just the right amount of sauce to not over power the scallop, which was perfectly cooked. 1 | |

| The ripped banana was not only ripped, but petrified and tasteless. 0 | |

| At least think to refill my water before I struggle to wave you over for 10 minutes. 0 | |

| This place receives stars for their APPETIZERS!!! 1 | |

| The cocktails are all handmade and delicious. 1 | |

| We'd definitely go back here again. 1 | |

| We are so glad we found this place. 1 | |

| Great food and service, huge portions and they give a military discount. 1 | |

| Always a great time at Dos Gringos! 1 | |

| Update.....went back for a second time and it was still just as amazing 1 | |

| We got the food and apparently they have never heard of salt and the batter on the fish was chewy. 0 | |

| A great way to finish a great. 1 | |

| The deal included 5 tastings and 2 drinks, and Jeff went above and beyond what we expected. 1 | |

| - Really, really good rice, all the time. 1 | |

| The service was meh. 0 | |

| It took over 30 min to get their milkshake, which was nothing more than chocolate milk. 0 | |

| I guess I should have known that this place would suck, because it is inside of the Excalibur, but I didn't use my common sense. 0 | |

| The scallop dish is quite appalling for value as well. 0 | |

| 2 times - Very Bad Customer Service ! 0 | |

| The sweet potato fries were very good and seasoned well. 1 | |

| Today is the second time I've been to their lunch buffet and it was pretty good. 1 | |

| There is so much good food in Vegas that I feel cheated for wasting an eating opportunity by going to Rice and Company. 0 | |

| Coming here is like experiencing an underwhelming relationship where both parties can't wait for the other person to ask to break up. 0 | |

| walked in and the place smelled like an old grease trap and only 2 others there eating. 0 | |

| The turkey and roast beef were bland. 0 | |

| This place has it! 1 | |

| The pan cakes everyone are raving about taste like a sugary disaster tailored to the palate of a six year old. 0 | |

| I love the Pho and the spring rolls oh so yummy you have to try. 1 | |

| The poor batter to meat ratio made the chicken tenders very unsatisfying. 0 | |

| All I have to say is the food was amazing!!! 1 | |

| Omelets are to die for! 1 | |

| Everything was fresh and delicious! 1 | |

| In summary, this was a largely disappointing dining experience. 0 | |

| It's like a really sexy party in your mouth, where you're outrageously flirting with the hottest person at the party. 1 | |

| Never been to Hard Rock Casino before, WILL NEVER EVER STEP FORWARD IN IT AGAIN! 0 | |

| Best breakfast buffet!!! 1 | |

| say bye bye to your tip lady! 0 | |

| We'll never go again. 0 | |

| Will be back again! 1 | |

| Food arrived quickly! 1 | |

| It was not good. 0 | |

| On the up side, their cafe serves really good food. 1 | |

| Our server was fantastic and when he found out the wife loves roasted garlic and bone marrow, he added extra to our meal and another marrow to go! 1 | |

| The only good thing was our waiter, he was very helpful and kept the bloddy mary's coming. 1 | |

| Best Buffet in town, for the price you cannot beat it. 1 | |

| I LOVED their mussels cooked in this wine reduction, the duck was tender, and their potato dishes were delicious. 1 | |

| This is one of the better buffets that I have been to. 1 | |

| So we went to Tigerlilly and had a fantastic afternoon! 1 | |

| The food was delicious, our bartender was attentive and personable AND we got a great deal! 1 | |

| The ambience is wonderful and there is music playing. 1 | |

| Will go back next trip out. 1 | |

| Sooooo good!! 1 | |

| REAL sushi lovers, let's be honest - Yama is not that good. 0 | |

| At least 40min passed in between us ordering and the food arriving, and it wasn't that busy. 0 | |

| This is a really fantastic Thai restaurant which is definitely worth a visit. 1 | |

| Nice, spicy and tender. 1 | |

| Good prices. 1 | |

| Check it out. 1 | |

| It was pretty gross! 0 | |

| I've had better atmosphere. 0 | |

| Kind of hard to mess up a steak but they did. 0 | |

| Although I very much liked the look and sound of this place, the actual experience was a bit disappointing. 0 | |

| I just don't know how this place managed to served the blandest food I have ever eaten when they are preparing Indian cuisine. 0 | |

| Worst service to boot, but that is the least of their worries. 0 | |

| Service was fine and the waitress was friendly. 1 | |

| The guys all had steaks, and our steak loving son who has had steak at the best and worst places said it was the best steak he's ever eaten. 1 | |

| We thought you'd have to venture further away to get good sushi, but this place really hit the spot that night. 1 | |

| Host staff were, for lack of a better word, BITCHES! 0 | |

| Bland... Not a liking this place for a number of reasons and I don't want to waste time on bad reviewing.. I'll leave it at that... 0 | |

| Phenomenal food, service and ambiance. 1 | |

| I wouldn't return. 0 | |

| Definitely worth venturing off the strip for the pork belly, will return next time I'm in Vegas. 1 | |

| This place is way too overpriced for mediocre food. 0 | |

| Penne vodka excellent! 1 | |

| They have a good selection of food including a massive meatloaf sandwich, a crispy chicken wrap, a delish tuna melt and some tasty burgers. 1 | |

| The management is rude. 0 | |

| Delicious NYC bagels, good selections of cream cheese, real Lox with capers even. 1 | |

| Great Subway, in fact it's so good when you come here every other Subway will not meet your expectations. 1 | |

| I had a seriously solid breakfast here. 1 | |

| This is one of the best bars with food in Vegas. 1 | |

| He was extremely rude and really, there are so many other restaurants I would love to dine at during a weekend in Vegas. 0 | |

| My drink was never empty and he made some really great menu suggestions. 1 | |

| Don't do it!!!! 0 | |

| The waiter wasn't helpful or friendly and rarely checked on us. 0 | |

| My husband and I ate lunch here and were very disappointed with the food and service. 0 | |

| And the red curry had so much bamboo shoots and wasn't very tasty to me. 0 | |

| Nice blanket of moz over top but i feel like this was done to cover up the subpar food. 1 | |

| The bathrooms are clean and the place itself is well decorated. 1 | |

| The menu is always changing, food quality is going down & service is extremely slow. 0 | |

| The service was a little slow , considering that were served by 3 people servers so the food was coming in a slow pace. 0 | |

| I give it 2 thumbs down 0 | |

| We watched our waiter pay a lot more attention to other tables and ignore us. 0 | |

| My fiancé and I came in the middle of the day and we were greeted and seated right away. 1 | |

| This is a great restaurant at the Mandalay Bay. 1 | |

| We waited for forty five minutes in vain. 0 | |

| Crostini that came with the salad was stale. 0 | |

| Some highlights : Great quality nigiri here! 1 | |

| the staff is friendly and the joint is always clean. 1 | |

| this was a different cut than the piece the other day but still wonderful and tender s well as well flavored. 1 | |

| I ordered the Voodoo pasta and it was the first time I'd had really excellent pasta since going gluten free several years ago. 1 | |

| this place is good. 1 | |

| Unfortunately, we must have hit the bakery on leftover day because everything we ordered was STALE. 0 | |

| I came back today since they relocated and still not impressed. 0 | |

| I was seated immediately. 1 | |

| Their menu is diverse, and reasonably priced. 1 | |

| Avoid at all cost! 0 | |

| Restaurant is always full but never a wait. 1 | |

| DELICIOUS!! 1 | |

| This place is hands-down one of the best places to eat in the Phoenix metro area. 1 | |

| So don't go there if you are looking for good food... 0 | |

| I've never been treated so bad. 0 | |

| Bacon is hella salty. 1 | |

| We also ordered the spinach and avocado salad, the ingredients were sad and the dressing literally had zero taste. 0 | |

| This really is how Vegas fine dining used to be, right down to the menus handed to the ladies that have no prices listed. 1 | |

| The waitresses are very friendly. 1 | |

| Lordy, the Khao Soi is a dish that is not to be missed for curry lovers! 1 | |

| Everything on the menu is terrific and we were also thrilled that they made amazing accommodations for our vegetarian daughter. 1 | |

| Perhaps I caught them on an off night judging by the other reviews, but I'm not inspired to go back. 0 | |

| The service here leaves a lot to be desired. 0 | |

| The atmosphere is modern and hip, while maintaining a touch of coziness. 1 | |

| Not a weekly haunt, but definitely a place to come back to every once in a while. 1 | |

| We literally sat there for 20 minutes with no one asking to take our order. 0 | |

| The burger had absolutely no flavor - the meat itself was totally bland, the burger was overcooked and there was no charcoal flavor. 0 | |

| I also decided not to send it back because our waitress looked like she was on the verge of having a heart attack. 0 | |

| I dressed up to be treated so rudely! 0 | |

| It was probably dirt. 0 | |

| Love this place, hits the spot when I want something healthy but not lacking in quantity or flavor. 1 | |

| I ordered the Lemon raspberry ice cocktail which was also incredible. 1 | |

| The food sucked, which we expected but it sucked more than we could have imagined. 0 | |

| Interesting decor. 1 | |

| What I really like there is the crepe station. 1 | |

| Also were served hot bread and butter, and home made potato chips with bacon bits on top....very original and very good. 1 | |

| you can watch them preparing the delicious food!) 1 | |

| Both of the egg rolls were fantastic. 1 | |

| When my order arrived, one of the gyros was missing. 0 | |

| I had a salad with the wings, and some ice cream for dessert and left feeling quite satisfied. 1 | |

| I'm not really sure how Joey's was voted best hot dog in the Valley by readers of Phoenix Magazine. 0 | |

| The best place to go for a tasty bowl of Pho! 1 | |

| The live music on Fridays totally blows. 0 | |

| I've never been more insulted or felt disrespected. 0 | |

| Very friendly staff. 1 | |

| It is worth the drive. 1 | |

| I had heard good things about this place, but it exceeding every hope I could have dreamed of. 1 | |

| Food was great and so was the serivce! 1 | |

| The warm beer didn't help. 0 | |

| Great brunch spot. 1 | |

| Service is friendly and inviting. 1 | |

| Very good lunch spot. 1 | |

| I've lived here since 1979 and this was the first (and last) time I've stepped foot into this place. 0 | |

| The WORST EXPERIENCE EVER. 0 | |

| Must have been an off night at this place. 0 | |

| The sides are delish - mixed mushrooms, yukon gold puree, white corn - beateous. 1 | |

| If that bug never showed up I would have given a 4 for sure, but on the other side of the wall where this bug was climbing was the kitchen. 0 | |

| For about 10 minutes, we we're waiting for her salad when we realized that it wasn't coming any time soon. 0 | |

| My friend loved the salmon tartar. 1 | |

| Won't go back. 0 | |

| Extremely Tasty! 1 | |

| Waitress was good though! 1 | |

| Soggy and not good. 0 | |

| The Jamaican mojitos are delicious. 1 | |

| Which are small and not worth the price. 0 | |

| - the food is rich so order accordingly. 1 | |

| The shower area is outside so you can only rinse, not take a full shower, unless you don't mind being nude for everyone to see! 0 | |

| The service was a bit lacking. 0 | |

| Lobster Bisque, Bussell Sprouts, Risotto, Filet ALL needed salt and pepper..and of course there is none at the tables. 0 | |

| Hopefully this bodes for them going out of business and someone who can cook can come in. 0 | |

| It was either too cold, not enough flavor or just bad. 0 | |

| I loved the bacon wrapped dates. 1 | |

| This is an unbelievable BARGAIN! 1 | |

| The folks at Otto always make us feel so welcome and special. 1 | |

| As for the "mains," also uninspired. 0 | |

| This is the place where I first had pho and it was amazing!! 1 | |

| This wonderful experience made this place a must-stop whenever we are in town again. 1 | |

| If the food isn't bad enough for you, then enjoy dealing with the world's worst/annoying drunk people. 0 | |

| Very very fun chef. 1 | |

| Ordered a double cheeseburger & got a single patty that was falling apart (picture uploaded) Yeah, still sucks. 0 | |

| Great place to have a couple drinks and watch any and all sporting events as the walls are covered with TV's. 1 | |

| If it were possible to give them zero stars, they'd have it. 0 | |

| The descriptions said "yum yum sauce" and another said "eel sauce", yet another said "spicy mayo"...well NONE of the rolls had sauces on them. 0 | |

| I'd say that would be the hardest decision... Honestly, all of M's dishes taste how they are supposed to taste (amazing). 1 | |

| If she had not rolled the eyes we may have stayed... Not sure if we will go back and try it again. 0 | |

| Everyone is very attentive, providing excellent customer service. 1 | |

| Horrible - don't waste your time and money. 0 | |

| Now this dish was quite flavourful. 1 | |

| By this time our side of the restaurant was almost empty so there was no excuse. 0 | |

| (It wasn't busy either) Also, the building was FREEZING cold. 0 | |

| like the other reviewer said "you couldn't pay me to eat at this place again." 0 | |

| -Drinks took close to 30 minutes to come out at one point. 0 | |

| Seriously flavorful delights, folks. 1 | |

| Much better than the other AYCE sushi place I went to in Vegas. 1 | |

| The lighting is just dark enough to set the mood. 1 | |

| Based on the sub-par service I received and no effort to show their gratitude for my business I won't be going back. 0 | |

| Owner's are really great people.! 1 | |

| There is nothing privileged about working/eating there. 0 | |

| The Greek dressing was very creamy and flavorful. 1 | |

| Overall, I don't think that I would take my parents to this place again because they made most of the similar complaints that I silently felt too. 0 | |

| Now the pizza itself was good the peanut sauce was very tasty. 1 | |

| We had 7 at our table and the service was pretty fast. 1 | |

| Fantastic service here. 1 | |

| I as well would've given godfathers zero stars if possible. 0 | |

| They know how to make them here. 1 | |

| very tough and very short on flavor! 0 | |

| I hope this place sticks around. 1 | |

| I have been in more than a few bars in Vegas, and do not ever recall being charged for tap water. 0 | |

| The restaurant atmosphere was exquisite. 1 | |

| Good service, very clean, and inexpensive, to boot! 1 | |

| The seafood was fresh and generous in portion. 1 | |

| Plus, it's only 8 bucks. 1 | |

| The service was not up to par, either. 0 | |

| Thus far, have only visited twice and the food was absolutely delicious each time. 1 | |

| Just as good as when I had it more than a year ago! 1 | |

| For a self proclaimed coffee cafe, I was wildly disappointed. 0 | |

| The Veggitarian platter is out of this world! 1 | |

| You cant go wrong with any of the food here. 1 | |

| You can't beat that. 1 | |

| Stopped by this place while in Madison for the Ironman, very friendly, kind staff. 1 | |

| The chefs were friendly and did a good job. 1 | |

| I've had better, not only from dedicated boba tea spots, but even from Jenni Pho. 0 | |

| I liked the patio and the service was outstanding. 1 | |

| The goat taco didn't skimp on the meat and wow what FLAVOR! 1 | |

| I think not again 0 | |

| I had the mac salad and it was pretty bland so I will not be getting that again. 0 | |

| I went to Bachi Burger on a friend's recommendation and was not disappointed. 1 | |

| Service stinks here! 0 | |

| I waited and waited. 0 | |

| This place is not quality sushi, it is not a quality restaurant. 0 | |

| I would definitely recommend the wings as well as the pizza. 1 | |

| Great Pizza and Salads! 1 | |

| Things that went wrong: - They burned the saganaki. 0 | |

| We waited an hour for what was a breakfast I could have done 100 times better at home. 0 | |

| This place is amazing! 1 | |

| I hate to disagree with my fellow Yelpers, but my husband and I were so disappointed with this place. 0 | |

| Waited 2 hours & never got either of our pizzas as many other around us who came in later did! 0 | |

| Just don't know why they were so slow. 0 | |

| The staff is great, the food is delish, and they have an incredible beer selection. 1 | |

| I live in the neighborhood so I am disappointed I won't be back here, because it is a convenient location. 0 | |

| I didn't know pulled pork could be soooo delicious. 1 | |

| You get incredibly fresh fish, prepared with care. 1 | |

| Before I go in to why I gave a 1 star rating please know that this was my third time eating at Bachi burger before writing a review. 0 | |

| I love the fact that everything on their menu is worth it. 1 | |

| Never again will I be dining at this place! 0 | |

| The food was excellent and service was very good. 1 | |

| Good beer & drink selection and good food selection. 1 | |

| Please stay away from the shrimp stir fried noodles. 0 | |

| The potato chip order was sad... I could probably count how many chips were in that box and it was probably around 12. 0 | |

| Food was really boring. 0 | |

| Good Service-check! 1 | |

| This greedy corporation will NEVER see another dime from me! 0 | |

| Will never, ever go back. 0 | |

| As much as I'd like to go back, I can't get passed the atrocious service and will never return. 0 | |

| In the summer, you can dine in a charming outdoor patio - so very delightful. 1 | |

| I did not expect this to be so good! 1 | |

| Fantastic food! 1 | |

| She ordered a toasted English muffin that came out untoasted. 0 | |

| The food was very good. 1 | |

| Never going back. 0 | |

| Great food for the price, which is very high quality and house made. 1 | |

| The bus boy on the other hand was so rude. 0 | |

| By this point, my friends and I had basically figured out this place was a joke and didn't mind making it publicly and loudly known. 0 | |

| Back to good BBQ, lighter fare, reasonable pricing and tell the public they are back to the old ways. 1 | |

| And considering the two of us left there very full and happy for about $20, you just can't go wrong. 1 | |

| All the bread is made in-house! 1 | |

| The only downside is the service. 0 | |

| Also, the fries are without a doubt the worst fries I've ever had. 0 | |

| Service was exceptional and food was a good as all the reviews. 1 | |

| A couple of months later, I returned and had an amazing meal. 1 | |

| Favorite place in town for shawarrrrrrma!!!!!! 1 | |

| The black eyed peas and sweet potatoes... UNREAL! 1 | |

| You won't be disappointed. 1 | |

| They could serve it with just the vinaigrette and it may make for a better overall dish, but it was still very good. 1 | |

| I go to far too many places and I've never seen any restaurant that serves a 1 egg breakfast, especially for $4.00. 0 | |

| When my mom and I got home she immediately got sick and she only had a few bites of salad. 0 | |

| The servers are not pleasant to deal with and they don't always honor Pizza Hut coupons. 0 | |

| Both of them were truly unbelievably good, and I am so glad we went back. 1 | |

| We had fantastic service, and were pleased by the atmosphere. 1 | |

| Everything was gross. 0 | |

| I love this place. 1 | |

| Great service and food. 1 | |

| First - the bathrooms at this location were dirty- Seat covers were not replenished & just plain yucky!!! 0 | |

| The burger... I got the "Gold Standard" a $17 burger and was kind of disappointed. 0 | |

| OMG, the food was delicioso! 1 | |

| There is nothing authentic about this place. 0 | |

| the spaghetti is nothing special whatsoever. 0 | |

| Of all the dishes, the salmon was the best, but all were great. 1 | |

| The vegetables are so fresh and the sauce feels like authentic Thai. 1 | |

| It's worth driving up from Tucson! 1 | |

| The selection was probably the worst I've seen in Vegas.....there was none. 0 | |

| Pretty good beer selection too. 1 | |

| This place is like Chipotle, but BETTER. 1 | |

| Classy/warm atmosphere, fun and fresh appetizers, succulent steaks (Baseball steak!!!!! 1 | |

| 5 stars for the brick oven bread app! 1 | |

| I have eaten here multiple times, and each time the food was delicious. 1 | |

| We sat another ten minutes and finally gave up and left. 0 | |

| He was terrible! 0 | |

| Everyone is treated equally special. 1 | |

| It shouldn't take 30 min for pancakes and eggs. 0 | |

| It was delicious!!! 1 | |

| On the good side, the staff was genuinely pleasant and enthusiastic - a real treat. 1 | |

| Sadly, Gordon Ramsey's Steak is a place we shall sharply avoid during our next trip to Vegas. 0 | |

| As always the evening was wonderful and the food delicious! 1 | |

| Best fish I've ever had in my life! 1 | |

| (The bathroom is just next door and very nice.) 1 | |

| The buffet is small and all the food they offered was BLAND. 0 | |

| This is an Outstanding little restaurant with some of the Best Food I have ever tasted. 1 | |

| Pretty cool I would say. 1 | |

| Definitely a turn off for me & i doubt I'll be back unless someone else is buying. 0 | |

| Server did a great job handling our large rowdy table. 1 | |

| I find wasting food to be despicable, but this just wasn't food. 0 | |

| My wife had the Lobster Bisque soup which was lukewarm. 0 | |

| Would come back again if I had a sushi craving while in Vegas. 1 | |

| The staff are great, the ambiance is great. 1 | |

| He deserves 5 stars. 1 | |

| I left with a stomach ache and felt sick the rest of the day. 0 | |

| They dropped more than the ball. 0 | |

| The dining space is tiny, but elegantly decorated and comfortable. 1 | |

| They will customize your order any way you'd like, my usual is Eggplant with Green Bean stir fry, love it! 1 | |

| And the beans and rice were mediocre at best. 0 | |

| Best tacos in town by far!! 1 | |

| I took back my money and got outta there. 0 | |

| In an interesting part of town, this place is amazing. 1 | |

| RUDE & INCONSIDERATE MANAGEMENT. 0 | |

| The staff are now not as friendly, the wait times for being served are horrible, no one even says hi for the first 10 minutes. 0 | |

| I won't be back. 0 | |

| They have great dinners. 1 | |

| The service was outshining & I definitely recommend the Halibut. 1 | |

| The food was terrible. 0 | |

| WILL NEVER EVER GO BACK AND HAVE TOLD MANY PEOPLE WHAT HAD HAPPENED. 0 | |

| I don't recommend unless your car breaks down in front of it and you are starving. 0 | |

| I will come back here every time I'm in Vegas. 1 | |

| This place deserves one star and 90% has to do with the food. 0 | |

| This is a disgrace. 0 | |

| Def coming back to bowl next time 1 | |

| If you want healthy authentic or ethic food, try this place. 1 | |

| I will continue to come here on ladies night andddd date night ... highly recommend this place to anyone who is in the area. 1 | |

| I have been here several times in the past, and the experience has always been great. 1 | |

| We walked away stuffed and happy about our first Vegas buffet experience. 1 | |

| Service was excellent and prices are pretty reasonable considering this is Vegas and located inside the Crystals shopping mall by Aria. 1 | |

| To summarize... the food was incredible, nay, transcendant... but nothing brings me joy quite like the memory of the pneumatic condiment dispenser. 1 | |

| I'm probably one of the few people to ever go to Ians and not like it. 0 | |

| Kids pizza is always a hit too with lots of great side dish options for the kiddos! 1 | |

| Service is perfect and the family atmosphere is nice to see. 1 | |

| Cooked to perfection and the service was impeccable. 1 | |

| This one is simply a disappointment. 0 | |

| Overall, I was very disappointed with the quality of food at Bouchon. 0 | |

| I don't have to be an accountant to know I'm getting screwed! 0 | |

| Great place to eat, reminds me of the little mom and pop shops in the San Francisco Bay Area. 1 | |

| Today was my first taste of a Buldogis Gourmet Hot Dog and I have to tell you it was more than I ever thought possible. 1 | |

| Left very frustrated. 0 | |

| I'll definitely be in soon again. 1 | |

| Food was really good and I got full petty fast. 1 | |

| Service was fantastic. 1 | |

| TOTAL WASTE OF TIME. 0 | |

| I don't know what kind it is but they have the best iced tea. 1 | |

| Come hungry, leave happy and stuffed! 1 | |

| For service, I give them no stars. 0 | |

| I can assure you that you won't be disappointed. 1 | |

| I can take a little bad service but the food sucks. 0 | |

| Gave up trying to eat any of the crust (teeth still sore). 0 | |

| But now I was completely grossed out. 0 | |

| I really enjoyed eating here. 1 | |

| First time going but I think I will quickly become a regular. 1 | |

| Our server was very nice, and even though he looked a little overwhelmed with all of our needs, he stayed professional and friendly until the end. 1 | |

| From what my dinner companions told me...everything was very fresh with nice texture and taste. 1 | |

| On the ground, right next to our table was a large, smeared, been-stepped-in-and-tracked-everywhere pile of green bird poop. 0 | |

| Furthermore, you can't even find hours of operation on the website! 0 | |

| We've tried to like this place but after 10+ times I think we're done with them. 0 | |

| What a mistake that was! 0 | |

| No complaints! 1 | |

| This is some seriously good pizza and I'm an expert/connisseur on the topic. 1 | |

| Waiter was a jerk. 0 | |

| Strike 2, who wants to be rushed. 0 | |

| These are the nicest restaurant owners I've ever come across. 1 | |

| I never come again. 0 | |

| We loved the biscuits!!! 1 | |

| Service is quick and friendly. 1 | |

| Ordered an appetizer and took 40 minutes and then the pizza another 10 minutes. 0 | |

| So absolutley fantastic. 1 | |

| It was a huge awkward 1.5lb piece of cow that was 3/4ths gristle and fat. 0 | |

| definitely will come back here again. 1 | |

| I like Steiners because it's dark and it feels like a bar. 1 | |

| Wow very spicy but delicious. 1 | |

| If you're not familiar, check it out. 1 | |

| I'll take my business dinner dollars elsewhere. 0 | |

| I'd love to go back. 1 | |

| Anyway, this FS restaurant has a wonderful breakfast/lunch. 1 | |

| Nothing special. 0 | |

| Each day of the week they have a different deal and it's all so delicious! 1 | |

| Not to mention the combination of pears, almonds and bacon is a big winner! 1 | |

| Will not be back. 0 | |

| Sauce was tasteless. 0 | |

| The food is delicious and just spicy enough, so be sure to ask for spicier if you prefer it that way. 1 | |

| My ribeye steak was cooked perfectly and had great mesquite flavor. 1 | |

| I don't think we'll be going back anytime soon. 0 | |

| Food was so gooodd. 1 | |

| I am far from a sushi connoisseur but I can definitely tell the difference between good food and bad food and this was certainly bad food. 0 | |

| I was so insulted. 0 | |

| The last 3 times I had lunch here has been bad. 0 | |

| The chicken wings contained the driest chicken meat I have ever eaten. 0 | |

| The food was very good and I enjoyed every mouthful, an enjoyable relaxed venue for couples small family groups etc. 1 | |

| Nargile - I think you are great. 1 | |

| Best tater tots in the southwest. 1 | |

| We loved the place. 1 | |

| Definitely not worth the $3 I paid. 0 | |

| The vanilla ice cream was creamy and smooth while the profiterole (choux) pastry was fresh enough. 1 | |

| Im in AZ all the time and now have my new spot. 1 | |

| The manager was the worst. 0 | |

| The inside is really quite nice and very clean. 1 | |

| The food was outstanding and the prices were very reasonable. 1 | |

| I don't think I'll be running back to Carly's anytime soon for food. 0 | |

| This is was due to the fact that it took 20 minutes to be acknowledged, then another 35 minutes to get our food...and they kept forgetting things. 0 | |

| Love the margaritas, too! 1 | |

| This was my first and only Vegas buffet and it did not disappoint. 1 | |

| Very good, though! 1 | |

| The one down note is the ventilation could use some upgrading. 0 | |

| Great pork sandwich. 1 | |

| Don't waste your time here. 0 | |

| Total letdown, I would much rather just go to the Camelback Flower Shop and Cartel Coffee. 0 | |

| Third, the cheese on my friend's burger was cold. 0 | |

| We enjoy their pizza and brunch. 1 | |

| The steaks are all well trimmed and also perfectly cooked. 1 | |

| We had a group of 70+ when we claimed we would only have 40 and they handled us beautifully. 1 | |

| I LOVED it! 1 | |

| We asked for the bill to leave without eating and they didn't bring that either. 0 | |

| This place is a jewel in Las Vegas, and exactly what I've been hoping to find in nearly ten years living here. 1 | |

| Seafood was limited to boiled shrimp and crab legs but the crab legs definitely did not taste fresh. 0 | |

| The selection of food was not the best. 0 | |

| Delicious and I will absolutely be back! 1 | |

| This isn't a small family restaurant, this is a fine dining establishment. 1 | |

| They had a toro tartare with a cavier that was extraordinary and I liked the thinly sliced wagyu with white truffle. 1 | |

| I dont think I will be back for a very long time. 0 | |

| It was attached to a gas station, and that is rarely a good sign. 0 | |

| How awesome is that. 1 | |

| I will be back many times soon. 1 | |

| The menu had so much good stuff on it i could not decide! 1 | |

| Worse of all, he humiliated his worker right in front of me..Bunch of horrible name callings. 0 | |

| CONCLUSION: Very filling meals. 1 | |

| Their daily specials are always a hit with my group. 1 | |

| And then tragedy struck. 0 | |

| The pancake was also really good and pretty large at that. 1 | |

| This was my first crawfish experience, and it was delicious! 1 | |

| Their monster chicken fried steak and eggs is my all time favorite. 1 | |

| Waitress was sweet and funny. 1 | |

| I also had to taste my Mom's multi-grain pumpkin pancakes with pecan butter and they were amazing, fluffy, and delicious! 1 | |

| I'd rather eat airline food, seriously. 0 | |

| Cant say enough good things about this place. 1 | |

| The ambiance was incredible. 1 | |

| The waitress and manager are so friendly. 1 | |

| I would not recommend this place. 0 | |

| Overall I wasn't very impressed with Noca. 0 | |

| My gyro was basically lettuce only. 0 | |

| Terrible service! 0 | |

| Thoroughly disappointed! 0 | |

| I don't each much pasta, but I love the homemade /hand made pastas and thin pizzas here. 1 | |

| Give it a try, you will be happy you did. 1 | |

| By far the BEST cheesecurds we have ever had! 1 | |

| Reasonably priced also! 1 | |

| Everything was perfect the night we were in. 1 | |

| The food is very good for your typical bar food. 1 | |

| it was a drive to get there. 0 | |

| At first glance it is a lovely bakery cafe - nice ambiance, clean, friendly staff. 1 | |

| Anyway, I do not think i will go back there. 0 | |

| Point your finger at any item on the menu, order it and you won't be disappointed. 1 | |

| Oh this is such a thing of beauty, this restaurant. 1 | |

| If you haven't gone here GO NOW! 1 | |

| A greasy, unhealthy meal. 0 | |

| first time there and might just be the last. 0 | |

| Those burgers were amazing. 1 | |

| Similarly, the delivery man did not say a word of apology when our food was 45 minutes late. 0 | |

| And it was way to expensive. 0 | |

| Be sure to order dessert, even if you need to pack it to-go - the tiramisu and cannoli are both to die for. 1 | |

| This was my first time and I can't wait until the next. 1 | |

| The bartender was also nice. 1 | |

| Everything was good and tasty! 1 | |

| This place is two thumbs up....way up. 1 | |

| The best place in Vegas for breakfast (just check out a Sat, or Sun. 1 | |

| If you love authentic Mexican food and want a whole bunch of interesting, yet delicious meats to choose from, you need to try this place. 1 | |

| Terrible management. 0 | |

| An excellent new restaurant by an experienced Frenchman. 1 | |

| If there were zero stars I would give it zero stars. 0 | |

| Great steak, great sides, great wine, amazing desserts. 1 | |

| Worst martini ever! 0 | |

| The steak and the shrimp are in my opinion the best entrees at GC. 1 | |

| I had the opportunity today to sample your amazing pizzas! 1 | |

| We waited for thirty minutes to be seated (although there were 8 vacant tables and we were the only folks waiting). 0 | |

| The yellowtail carpaccio was melt in your mouth fresh. 1 | |

| I won't try going back there even if it's empty. 0 | |

| No, I'm going to eat the potato that I found some strangers hair in it. 0 | |

| Just spicy enough.. Perfect actually. 1 | |

| Last night was my second time dining here and I was so happy I decided to go back! 1 | |

| not even a "hello, we will be right with you." 0 | |

| The desserts were a bit strange. 0 | |

| My boyfriend and I came here for the first time on a recent trip to Vegas and could not have been more pleased with the quality of food and service. 1 | |

| I really do recommend this place, you can go wrong with this donut place! 1 | |

| Nice ambiance. 1 | |

| I would recommend saving room for this! 1 | |

| I guess maybe we went on an off night but it was disgraceful. 0 | |

| However, my recent experience at this particular location was not so good. 0 | |

| I know this is not like the other restaurants at all, something is very off here! 0 | |

| AVOID THIS ESTABLISHMENT! 0 | |

| I think this restaurant suffers from not trying hard enough. 0 | |

| All of the tapas dishes were delicious! 1 | |

| I *heart* this place. 1 | |

| My salad had a bland vinegrette on the baby greens and hearts of Palm. 0 | |

| After two I felt disgusting. 0 | |

| A good time! 1 | |

| I believe that this place is a great stop for those with a huge belly and hankering for sushi. 1 | |

| Generous portions and great taste. 1 | |

| I will never go back to this place and will never ever recommended this place to anyone! 0 | |

| The servers went back and forth several times, not even so much as an "Are you being helped?" 0 | |

| Food was delicious! 1 | |

| AN HOUR... seriously? 0 | |

| I consider this theft. 0 | |

| Eew... This location needs a complete overhaul. 0 | |

| We recently witnessed her poor quality of management towards other guests as well. 0 | |

| Waited and waited and waited. 0 | |

| He also came back to check on us regularly, excellent service. 1 | |

| Our server was super nice and checked on us many times. 1 | |

| The pizza tasted old, super chewy in not a good way. 0 | |

| I swung in to give them a try but was deeply disappointed. 0 | |

| Service was good and the company was better! 1 | |

| The staff are also very friendly and efficient. 1 | |

| As for the service: I'm a fan, because it's quick and you're being served by some nice folks. 1 | |

| Boy was that sucker dry!!. 0 | |

| Over rated. 0 | |

| If you look for authentic Thai food, go else where. 0 | |

| Their steaks are 100% recommended! 1 | |

| After I pulled up my car I waited for another 15 minutes before being acknowledged. 0 | |

| Great food and great service in a clean and friendly setting. 1 | |

| All in all, I can assure you I'll be back. 1 | |

| I hate those things as much as cheap quality black olives. 0 | |

| My breakfast was perpared great, with a beautiful presentation of 3 giant slices of Toast, lightly dusted with powdered sugar. 1 | |

| The kids play area is NASTY! 0 | |

| Great place fo take out or eat in. 1 | |

| The waitress was friendly and happy to accomodate for vegan/veggie options. 1 | |

| OMG I felt like I had never eaten Thai food until this dish. 1 | |

| It was extremely "crumby" and pretty tasteless. 0 | |

| It was a pale color instead of nice and char and has NO flavor. 0 | |

| The croutons also taste homemade which is an extra plus. 1 | |

| I got home to see the driest damn wings ever! 0 | |

| It'll be a regular stop on my trips to Phoenix! 1 | |

| I really enjoyed Crema Café before they expanded. I even told friends they had the BEST breakfast. 1 | |

| Not good for the money. 0 | |

| I miss it and wish they had one in Philadelphia! 1 | |

| We got sitting fairly fast, but, ended up waiting 40 minutes just to place our order, another 30 minutes before the food arrived. 0 | |

| They also have the best cheese crisp in town. 1 | |

| Good value, great food, great service. 1 | |

| Couldn't ask for a more satisfying meal. 1 | |

| The food is good. 1 | |

| It was awesome. 1 | |

| I just wanted to leave. 0 | |

| We made the drive all the way from North Scottsdale... and I was not one bit disappointed! 1 | |

| I will not be eating there again. 0 | |

| !....THE OWNERS REALLY REALLY need to quit being soooooo cheap let them wrap my freaking sandwich in two papers not one! 0 | |

| I checked out this place a couple years ago and was not impressed. 0 | |

| The chicken I got was definitely reheated and was only ok, the wedges were cold and soggy. 0 | |

| Sorry, I will not be getting food from here anytime soon :( 0 | |

| An absolute must visit! 1 | |

| The cow tongue and cheek tacos are amazing. 1 | |

| My friend did not like his Bloody Mary. 0 | |

| Despite how hard I rate businesses, its actually rare for me to give a 1 star. 0 | |

| They really want to make your experience a good one. 1 | |

| I will not return. 0 | |

| I had the chicken Pho and it tasted very bland. 0 | |

| Very disappointing!!! 0 | |

| The grilled chicken was so tender and yellow from the saffron seasoning. 1 | |

| a drive thru means you do not want to wait around for half an hour for your food, but somehow when we end up going here they make us wait and wait. 0 | |

| Pretty awesome place. 1 | |

| Ambience is perfect. 1 | |

| Best of luck to the rude and non-customer service focused new management. 0 | |

| Any grandmother can make a roasted chicken better than this one. 0 | |

| I asked multiple times for the wine list and after some time of being ignored I went to the hostess and got one myself. 0 | |

| The staff is always super friendly and helpful, which is especially cool when you bring two small boys and a baby! 1 | |

| Four stars for the food & the guy in the blue shirt for his great vibe & still letting us in to eat ! 1 | |

| The roast beef sandwich tasted really good! 1 | |

| Same evening, him and I are both drastically sick. 0 | |

| High-quality chicken on the chicken Caesar salad. 1 | |

| Ordered burger rare came in we'll done. 0 | |

| We were promptly greeted and seated. 1 | |

| Tried to go here for lunch and it was a madhouse. 0 | |

| I was proven dead wrong by this sushi bar, not only because the quality is great, but the service is fast and the food, impeccable. 1 | |

| After waiting an hour and being seated, I was not in the greatest of moods. 0 | |

| This is a good joint. 1 | |

| The Macarons here are insanely good. 1 | |

| I'm not eating here! 0 | |

| Our waiter was very attentive, friendly, and informative. 1 | |

| Maybe if they weren't cold they would have been somewhat edible. 0 | |

| This place has a lot of promise but fails to deliver. 0 | |

| Very bad Experience! 0 | |

| What a mistake. 0 | |

| Food was average at best. 0 | |

| Great food. 1 | |

| We won't be going back anytime soon! 0 | |

| Very Very Disappointed ordered the $35 Big Bay Plater. 0 | |

| Great place to relax and have an awesome burger and beer. 1 | |

| It is PERFECT for a sit-down family meal or get together with a few friends. 1 | |

| Not much flavor to them, and very poorly constructed. 0 | |

| The patio seating was very comfortable. 1 | |

| The fried rice was dry as well. 0 | |

| Hands down my favorite Italian restaurant! 1 | |

| That just SCREAMS "LEGIT" in my book...somethat's also pretty rare here in Vegas. 1 | |

| It was just not a fun experience. 1 | |

| The atmosphere was great with a lovely duo of violinists playing songs we requested. 1 | |

| I personally love the hummus, pita, baklava, falafels and Baba Ganoush (it's amazing what they do with eggplant!). 1 | |

| Very convenient, since we were staying at the MGM! 1 | |

| The owners are super friendly and the staff is courteous. 1 | |

| Both great! 1 | |

| Eclectic selection. 1 | |

| The sweet potato tots were good but the onion rings were perfection or as close as I have had. 1 | |

| The staff was very attentive. 1 | |

| And the chef was generous with his time (even came around twice so we can take pictures with him). 1 | |

| The owner used to work at Nobu, so this place is really similar for half the price. 1 | |

| Google mediocre and I imagine Smashburger will pop up. 0 | |

| dont go here. 0 | |

| I promise they won't disappoint. 1 | |

| As a sushi lover avoid this place by all means. 0 | |

| What a great double cheeseburger! 1 | |

| Awesome service and food. 1 | |

| A fantastic neighborhood gem !!! 1 | |

| I can't wait to go back. 1 | |

| The plantains were the worst I've ever tasted. 0 | |

| It's a great place and I highly recommend it. 1 | |

| Service was slow and not attentive. 0 | |

| I gave it 5 stars then, and I'm giving it 5 stars now. 1 | |

| Your staff spends more time talking to themselves than me. 0 | |

| Dessert: Panna Cotta was amazing. 1 | |

| Very good food, great atmosphere.1 1 | |

| Damn good steak. 1 | |

| Total brunch fail. 0 | |

| Prices are very reasonable, flavors are spot on, the sauce is home made, and the slaw is not drenched in mayo. 1 | |

| The decor is nice, and the piano music soundtrack is pleasant. 1 | |

| The steak was amazing...rge fillet relleno was the best seafood plate i have ever had! 1 | |

| Good food , good service . 1 | |

| It was absolutely amazing. 1 | |

| I probably won't be back, to be honest. 0 | |

| will definitely be back! 1 | |

| The sergeant pepper beef sandwich with auju sauce is an excellent sandwich as well. 1 | |

| Hawaiian Breeze, Mango Magic, and Pineapple Delight are the smoothies that I've tried so far and they're all good. 1 | |

| Went for lunch - service was slow. 0 | |

| We had so much to say about the place before we walked in that he expected it to be amazing, but was quickly disappointed. 0 | |

| I was mortified. 0 | |

| Needless to say, we will never be back here again. 0 | |

| Anyways, The food was definitely not filling at all, and for the price you pay you should expect more. 0 | |

| The chips that came out were dripping with grease, and mostly not edible. 0 | |

| I wasn't really impressed with Strip Steak. 0 | |

| Have been going since 2007 and every meal has been awesome!! 1 | |

| Our server was very nice and attentive as were the other serving staff. 1 | |

| The cashier was friendly and even brought the food out to me. 1 | |

| I work in the hospitality industry in Paradise Valley and have refrained from recommending Cibo any longer. 0 | |

| The atmosphere here is fun. 1 | |

| Would not recommend to others. 0 | |

| Service is quick and even "to go" orders are just like we like it! 1 | |

| I mean really, how do you get so famous for your fish and chips when it's so terrible!?! 0 | |

| That said, our mouths and bellies were still quite pleased. 1 | |

| Not my thing. 0 | |

| 2 Thumbs Up!! 1 | |

| If you are reading this please don't go there. 0 | |

| I loved the grilled pizza, reminded me of legit Italian pizza. 1 | |

| Only Pros : Large seating area/ Nice bar area/ Great simple drink menu/ The BEST brick oven pizza with homemade dough! 1 | |

| They have a really nice atmosphere. 1 | |

| Tonight I had the Elk Filet special...and it sucked. 0 | |

| After one bite, I was hooked. 1 | |

| We ordered some old classics and some new dishes after going there a few times and were sorely disappointed with everything. 0 | |

| Cute, quaint, simple, honest. 1 | |

| The chicken was deliciously seasoned and had the perfect fry on the outside and moist chicken on the inside. 1 | |

| The food was great as always, compliments to the chef. 1 | |

| Special thanks to Dylan T. for the recommendation on what to order :) All yummy for my tummy. 1 | |

| Awesome selection of beer. 1 | |

| Great food and awesome service! 1 | |

| One nice thing was that they added gratuity on the bill since our party was larger than 6 or 8, and they didn't expect more tip than that. 1 | |

| A FLY was in my apple juice.. A FLY!!!!!!!! 0 | |

| The Han Nan Chicken was also very tasty. 1 | |

| As for the service, I thought it was good. 1 | |

| The food was barely lukewarm, so it must have been sitting waiting for the server to bring it out to us. 0 | |

| Ryan's Bar is definitely one Edinburgh establishment I won't be revisiting. 0 | |

| Nicest Chinese restaurant I've been in a while. 1 | |

| Overall, I like there food and the service. 1 | |

| They also now serve Indian naan bread with hummus and some spicy pine nut sauce that was out of this world. 1 | |

| Probably never coming back, and wouldn't recommend it. 0 | |

| Friend's pasta -- also bad, he barely touched it. 0 | |

| Try them in the airport to experience some tasty food and speedy, friendly service. 1 | |

| I love the decor with the Chinese calligraphy wall paper. 1 | |

| Never had anything to complain about here. 1 | |

| The restaurant is very clean and has a family restaurant feel to it. 1 | |

| It was way over fried. 0 | |

| I'm not sure how long we stood there but it was long enough for me to begin to feel awkwardly out of place. 0 | |

| When I opened the sandwich, I was impressed, but not in a good way. 0 | |

| Will not be back! 0 | |

| There was a warm feeling with the service and I felt like their guest for a special treat. 1 | |

| An extensive menu provides lots of options for breakfast. 1 | |

| I always order from the vegetarian menu during dinner, which has a wide array of options to choose from. 1 | |

| I have watched their prices inflate, portions get smaller and management attitudes grow rapidly! 0 | |

| Wonderful lil tapas and the ambience made me feel all warm and fuzzy inside. 1 | |

| I got to enjoy the seafood salad, with a fabulous vinegrette. 1 | |

| The wontons were thin, not thick and chewy, almost melt in your mouth. 1 | |

| Level 5 spicy was perfect, where spice didn't over-whelm the soup. 1 | |

| We were sat right on time and our server from the get go was FANTASTIC! 1 | |

| Main thing I didn't enjoy is that the crowd is of older crowd, around mid 30s and up. 0 | |

| When I'm on this side of town, this will definitely be a spot I'll hit up again! 1 | |

| I had to wait over 30 minutes to get my drink and longer to get 2 arepas. 0 | |

| This is a GREAT place to eat! 1 | |

| The jalapeno bacon is soooo good. 1 | |

| The service was poor and thats being nice. 0 | |

| Food was good, service was good, Prices were good. 1 | |

| The place was not clean and the food oh so stale! 0 | |

| The chicken dishes are OK, the beef is like shoe leather. 0 | |

| But the service was beyond bad. 0 | |

| I'm so happy to be here!!!" 1 | |

| Tasted like dirt. 0 | |

| One of the few places in Phoenix that I would definately go back to again . 1 | |

| The block was amazing. 1 | |

| It's close to my house, it's low-key, non-fancy, affordable prices, good food. 1 | |

| * Both the Hot & Sour & the Egg Flower Soups were absolutely 5 Stars! 1 | |

| My sashimi was poor quality being soggy and tasteless. 0 | |

| Great time - family dinner on a Sunday night. 1 | |

| the food is not tasty at all, not to say its "real traditional Hunan style". 0 | |

| What did bother me, was the slow service. 0 | |

| The flair bartenders are absolutely amazing! 1 | |

| Their frozen margaritas are WAY too sugary for my taste. 0 | |

| These were so good we ordered them twice. 1 | |

| So in a nutshell: 1) The restaraunt smells like a combination of a dirty fish market and a sewer. 0 | |

| My girlfriend's veal was very bad. 0 | |

| Unfortunately, it was not good. 0 | |

| I had a pretty satifying experience. 1 | |

| Join the club and get awesome offers via email. 1 | |

| Perfect for someone (me) who only likes beer ice cold, or in this case, even colder. 1 | |

| Bland and flavorless is a good way of describing the barely tepid meat. 0 | |

| The chains, which I'm no fan of, beat this place easily. 0 | |

| The nachos are a MUST HAVE! 1 | |

| We will not be coming back. 0 | |

| I don't have very many words to say about this place, but it does everything pretty well. 1 | |

| The staff is super nice and very quick even with the crazy crowds of the downtown juries, lawyers, and court staff. 1 | |

| Great atmosphere, friendly and fast service. 1 | |

| When I received my Pita it was huge it did have a lot of meat in it so thumbs up there. 1 | |

| Once your food arrives it's meh. 0 | |

| Paying $7.85 for a hot dog and fries that looks like it came out of a kid's meal at the Wienerschnitzel is not my idea of a good meal. 0 | |

| The classic Maine Lobster Roll was fantastic. 1 | |

| My brother in law who works at the mall ate here same day, and guess what he was sick all night too. 0 | |

| So good I am going to have to review this place twice - once hereas a tribute to the place and once as a tribute to an event held here last night. 1 | |

| The chips and salsa were really good, the salsa was very fresh. 1 | |

| This place is great!!!!!!!!!!!!!! 1 | |

| Mediocre food. 0 | |

| Once you get inside you'll be impressed with the place. 1 | |

| I'm super pissd. 0 | |

| And service was super friendly. 1 | |

| Why are these sad little vegetables so overcooked? 0 | |

| This place was such a nice surprise! 1 | |

| They were golden-crispy and delicious. 1 | |

| I had high hopes for this place since the burgers are cooked over a charcoal grill, but unfortunately the taste fell flat, way flat. 0 | |

| I could eat their bruschetta all day it is devine. 1 | |

| Not a single employee came out to see if we were OK or even needed a water refill once they finally served us our food. 0 | |

| Lastly, the mozzarella sticks, they were the best thing we ordered. 1 | |

| The first time I ever came here I had an amazing experience, I still tell people how awesome the duck was. 1 | |

| The server was very negligent of our needs and made us feel very unwelcome... I would not suggest this place! 0 | |

| The service was terrible though. 0 | |

| This place is overpriced, not consistent with their boba, and it really is OVERPRICED! 0 | |

| It was packed!! 0 | |

| I love this place. 1 | |

| I can say that the desserts were yummy. 1 | |

| The food was terrible. 0 | |

| The seasonal fruit was fresh white peach puree. 1 | |

| It kept getting worse and worse so now I'm officially done. 0 | |

| This place should honestly be blown up. 0 | |

| But I definitely would not eat here again. 0 | |

| Do not waste your money here! 0 | |

| I love that they put their food in nice plastic containers as opposed to cramming it in little paper takeout boxes. 1 | |

| The crêpe was delicate and thin and moist. 1 | |

| Awful service. 0 | |

| Won't ever go here again. 0 | |

| Food quality has been horrible. 0 | |

| For that price I can think of a few place I would have much rather gone. 0 | |

| The service here is fair at best. 0 | |

| I do love sushi, but I found Kabuki to be over-priced, over-hip and under-services. 0 | |

| Do yourself a favor and stay away from this dish. 0 | |

| Very poor service. 0 | |

| No one at the table thought the food was above average or worth the wait that we had for it. 0 | |

| Best service and food ever, Maria our server was so good and friendly she made our day. 1 | |

| They were excellent. 1 | |

| I paid the bill but did not tip because I felt the server did a terrible job. 0 | |

| Just had lunch here and had a great experience. 1 | |

| I have never had such bland food which surprised me considering the article we read focused so much on their spices and flavor. 0 | |

| Food is way overpriced and portions are fucking small. 0 | |

| I recently tried Caballero's and I have been back every week since! 1 | |

| for 40 bucks a head, i really expect better food. 0 | |

| The food came out at a good pace. 1 | |

| I ate there twice on my last visit, and especially enjoyed the salmon salad. 1 | |

| I won't be back. 0 | |

| We could not believe how dirty the oysters were! 0 | |

| This place deserves no stars. 0 | |

| I would not recommend this place. 0 | |

| In fact I'm going to round up to 4 stars, just because she was so awesome. 1 | |

| To my disbelief, each dish qualified as the worst version of these foods I have ever tasted. 0 | |

| Bad day or not, I have a very low tolerance for rude customer service people, it is your job to be nice and polite, wash dishes otherwise!! 0 | |

| the potatoes were great and so was the biscuit. 1 | |

| I probably would not go here again. 0 | |

| So flavorful and has just the perfect amount of heat. 1 | |

| The price is reasonable and the service is great. 1 | |

| The Wife hated her meal (coconut shrimp), and our friends really did not enjoy their meals, either. 0 | |

| My fella got the huevos rancheros and they didn't look too appealing. 0 | |

| Went in for happy hour, great list of wines. 1 | |

| Some may say this buffet is pricey but I think you get what you pay for and this place you are getting quite a lot! 1 | |

| I probably won't be coming back here. 0 | |

| Worst food/service I've had in a while. 0 | |

| This place is pretty good, nice little vibe in the restaurant. 1 | |

| Talk about great customer service of course we will be back. 1 | |

| Hot dishes are not hot, cold dishes are close to room temp.I watched staff prepare food with BARE HANDS, no gloves.Everything is deep fried in oil. 0 | |

| I love their fries and their beans. 1 | |

| Always a pleasure dealing with him. 1 | |

| They have a plethora of salads and sandwiches, and everything I've tried gets my seal of approval. 1 | |

| This place is awesome if you want something light and healthy during the summer. 1 | |

| For sushi on the Strip, this is the place to go. 1 | |

| The service was great, even the manager came and helped with our table. 1 | |

| The feel of the dining room was more college cooking course than high class dining and the service was slow at best. 0 | |

| I started this review with two stars, but I'm editing it to give it only one. 0 | |

| this is the worst sushi i have ever eat besides Costco's. 0 | |

| All in all an excellent restaurant highlighted by great service, a unique menu, and a beautiful setting. 1 | |

| My boyfriend and i sat at the bar and had a completely delightful experience. 1 | |

| Weird vibe from owners. 0 | |

| There was hardly any meat. 0 | |

| I've had better bagels from the grocery store. 0 | |

| Go To Place for Gyros. 1 | |

| I love the owner/chef, his one authentic Japanese cool dude! 1 | |

| Now the burgers aren't as good, the pizza which used to be amazing is doughy and flavorless. 0 | |

| I found a six inch long piece of wire in my salsa. 0 | |

| The service was terrible, food was mediocre. 0 | |

| We definately enjoyed ourselves. 1 | |

| I ordered Albondigas soup - which was just warm - and tasted like tomato soup with frozen meatballs. 0 | |

| On three different occasions I asked for well done or medium well, and all three times I got the bloodiest piece of meat on my plate. 0 | |

| I had about two bites and refused to eat anymore. 0 | |

| The service was extremely slow. 0 | |

| After 20 minutes wait, I got a table. 0 | |

| Seriously killer hot chai latte. 1 | |

| No allergy warnings on the menu, and the waitress had absolutely no clue as to which meals did or did not contain peanuts. 0 | |

| My boyfriend tried the Mediterranean Chicken Salad and fell in love. 1 | |

| Their rotating beers on tap is also a highlight of this place. 1 | |

| Pricing is a bit of a concern at Mellow Mushroom. 0 | |

| Worst Thai ever. 0 | |

| If you stay in Vegas you must get breakfast here at least once. 1 | |

| I want to first say our server was great and we had perfect service. 1 | |

| The pizza selections are good. 1 | |

| I had strawberry tea, which was good. 1 | |

| Highly unprofessional and rude to a loyal patron! 0 | |

| Overall, a great experience. 1 | |

| Spend your money elsewhere. 0 | |

| Their regular toasted bread was equally satisfying with the occasional pats of butter... Mmmm...! 1 | |

| The Buffet at Bellagio was far from what I anticipated. 0 | |

| And the drinks are WEAK, people! 0 | |

| -My order was not correct. 0 | |

| Also, I feel like the chips are bought, not made in house. 0 | |

| After the disappointing dinner we went elsewhere for dessert. 0 | |

| The chips and sals a here is amazing!!!!!!!!!!!!!!!!!!! 1 | |

| We won't be returning. 0 | |

| This is my new fav Vegas buffet spot. 1 | |

| I seriously cannot believe that the owner has so many unexperienced employees that all are running around like chickens with their heads cut off. 0 | |

| Very, very sad. 0 | |

| i felt insulted and disrespected, how could you talk and judge another human being like that? 0 | |

| How can you call yourself a steakhouse if you can't properly cook a steak, I don't understand! 0 | |

| I'm not impressed with the concept or the food. 0 | |

| The only thing I wasn't too crazy about was their guacamole as I don't like it puréed. 0 | |

| There is really nothing for me at postinos, hope your experience is better 0 | |

| I got food poisoning here at the buffet. 0 | |

| They brought a fresh batch of fries and I was thinking yay something warm but no! 0 | |

| What SHOULD have been a hilarious, yummy Christmas Eve dinner to remember was the biggest fail of the entire trip for us. 0 | |

| Needless to say, I won't be going back anytime soon. 0 | |

| This place is disgusting! 0 | |

| Every time I eat here, I see caring teamwork to a professional degree. 1 | |

| The RI style calamari was a joke. 0 | |

| However, there was so much garlic in the fondue, it was barely edible. 0 | |

| I could barely stomach the meal, but didn't complain because it was a business lunch. 0 | |

| It was so bad, I had lost the heart to finish it. 0 | |

| It also took her forever to bring us the check when we asked for it. 0 | |

| We aren't ones to make a scene at restaurants but I just don't get it...definitely lost the love after this one! 0 | |

| Disappointing experience. 0 | |

| The food is about on par with Denny's, which is to say, not good at all. 0 | |

| If you want to wait for mediocre food and downright terrible service, then this is the place for you. 0 | |

| WAAAAAAyyyyyyyyyy over rated is all I am saying. 0 | |

| We won't be going back. 0 | |

| The place was fairly clean but the food simply wasn't worth it. 0 | |

| This place lacked style!! 0 | |

| The sangria was about half of a glass wine full and was $12, ridiculous. 0 | |

| Don't bother coming here. 0 | |

| The meat was pretty dry, I had the sliced brisket and pulled pork. 0 | |

| The building itself seems pretty neat, the bathroom is pretty trippy, but I wouldn't eat here again. 0 | |

| It was equally awful. 0 | |

| Probably not in a hurry to go back. 0 | |

| very slow at seating even with reservation. 0 | |

| Not good by any stretch of the imagination. 0 | |

| The cashew cream sauce was bland and the vegetables were undercooked. 0 | |

| The chipolte ranch dipping sause was tasteless, seemed thin and watered down with no heat. 0 | |

| It was a bit too sweet, not really spicy enough, and lacked flavor. 0 | |

| I was VERY disappointed!! 0 | |

| This place is horrible and way overpriced. 0 | |

| Maybe it's just their Vegetarian fare, but I've been twice and I thought it was average at best. 0 | |

| It wasn't busy at all and now we know why. 0 | |

| The tables outside are also dirty a lot of the time and the workers are not always friendly and helpful with the menu. 0 | |

| The ambiance here did not feel like a buffet setting, but more of a douchey indoor garden for tea and biscuits. 0 | |

| Con: spotty service. 0 | |

| The fries were not hot, and neither was my burger. 0 | |

| But then they came back cold. 0 | |

| Then our food came out, disappointment ensued. 0 | |

| The real disappointment was our waiter. 0 | |

| My husband said she was very rude... did not even apologize for the bad food or anything. 0 | |

| The only reason to eat here would be to fill up before a night of binge drinking just to get some carbs in your stomach. 0 | |

| Insults, profound deuchebaggery, and had to go outside for a smoke break while serving just to solidify it. 0 | |

| If someone orders two tacos don't' you think it may be part of customer service to ask if it is combo or ala cart? 0 | |

| She was quite disappointed although some blame needs to be placed at her door. 0 | |

| After all the rave reviews I couldn't wait to eat here......what a disappointment! 0 | |

| Del Taco is pretty nasty and should be avoided if possible. 0 | |

| It's NOT hard to make a decent hamburger. 0 | |

| But I don't like it. 0 | |

| Hell no will I go back 0 | |

| We've have gotten a much better service from the pizza place next door than the services we received from this restaurant. 0 | |

| I don't know what the big deal is about this place, but I won't be back "ya'all". 0 | |

| I immediately said I wanted to talk to the manager but I did not want to talk to the guy who was doing shots of fireball behind the bar. 0 | |

| The ambiance isn't much better. 0 | |

| Unfortunately, it only set us up for disapppointment with our entrees. 0 | |

| The food wasn't good. 0 | |

| Your servers suck, wait, correction, our server Heimer sucked. 0 | |

| What happened next was pretty....off putting. 0 | |

| too bad cause I know it's family owned, I really wanted to like this place. 0 | |

| Overpriced for what you are getting. 0 | |

| I vomited in the bathroom mid lunch. 0 | |

| I kept looking at the time and it had soon become 35 minutes, yet still no food. 0 | |

| I have been to very few places to eat that under no circumstances would I ever return to, and this tops the list. 0 | |

| We started with the tuna sashimi which was brownish in color and obviously wasn't fresh. 0 | |

| Food was below average. 0 | |

| It sure does beat the nachos at the movies but I would expect a little bit more coming from a restaurant. 0 | |

| All in all, Ha Long Bay was a bit of a flop. 0 | |

| The problem I have is that they charge $11.99 for a sandwich that is no bigger than a Subway sub (which offers better and more amount of vegetables). 0 | |

| Shrimp- When I unwrapped it (I live only 1/2 a mile from Brushfire) it was literally ice cold. 0 | |

| It lacked flavor, seemed undercooked, and dry. 0 | |

| It really is impressive that the place hasn't closed down. 0 | |

| I would avoid this place if you are staying in the Mirage. 0 | |

| The refried beans that came with my meal were dried out and crusty and the food was bland. 0 | |

| Spend your money and time some place else. 0 | |

| A lady at the table next to us found a live green caterpillar In her salad. 0 | |

| the presentation of the food was awful. 0 | |

| I can't tell you how disappointed I was. 0 | |

| I think food should have flavor and texture and both were lacking. 0 | |

| Appetite instantly gone. 0 | |

| Overall I was not impressed and would not go back. 0 | |

| The whole experience was underwhelming, and I think we'll just go to Ninja Sushi next time. 0 | |

| Then, as if I hadn't wasted enough of my life there, they poured salt in the wound by drawing out the time it took to bring the check. 0 |

Libraries

Each tool has its own set of libraries to manage this task. They all more or less split up the necessary tasks into classes and namespaces.

Python

With Python, as usual, we have the three main libraries needed to perform machine learning tasks.

import numpy as np

import matplotlib.pyplot as plt

import pandas as pd

Further down the process we also import two of Python's language processing modules: Regular Expressions (re) and the Natural Language Toolkit (nltk).

import re

import nltk

ML.NET

ML.NET has the usual suspects and also a few libraries that are specific to text preparation and consumption. The System namespaces are purely for reading in the data from a file and managing collections. The Microsoft.ML namespaces are the interesting ones. Probably the using directive that really stands out is the static Microsoft.ML.DataOperationsCatalog. This class is basically responsible for loading our data and performing the train and test split. Because it is a class rather than a namespace, it needs the static keyword; if you leave the keyword off, the compiler will provide an error and suggest a resolution. Pretty nice, right?

using System;

using System.Collections.Generic;

using System.IO;

using System.Linq;

using Microsoft.ML;

using Microsoft.ML.Data;

using static Microsoft.ML.DataOperationsCatalog;

using Microsoft.ML.Trainers;

using Microsoft.ML.Transforms.Text;

Apache Spark

Apache Spark requires a fair few classes from two separate libraries. The Apache Spark library provides a base for machine learning, while the John Snow Labs library adds natural language processing capabilities. (Note: as of writing this article, the John Snow Labs NLP library only works with Java 8.)

import com.johnsnowlabs.nlp.DocumentAssembler;

import com.johnsnowlabs.nlp.LightPipeline;

import com.johnsnowlabs.nlp.SparkNLP;

import com.johnsnowlabs.nlp.annotators.Tokenizer;

import com.johnsnowlabs.nlp.annotators.sda.vivekn.ViveknSentimentApproach;

import com.johnsnowlabs.nlp.pretrained.PretrainedPipeline;

import com.mj.machine.learning.spark.entity.NaturalLanguageProcessorSentiment;

import lombok.extern.slf4j.Slf4j;

import org.apache.spark.api.java.JavaSparkContext;

import org.apache.spark.ml.Pipeline;

import org.apache.spark.ml.PipelineModel;

import org.apache.spark.ml.PipelineStage;

import org.apache.spark.sql.Dataset;

import org.apache.spark.sql.Row;

import org.apache.spark.sql.SparkSession;

import org.springframework.stereotype.Service;

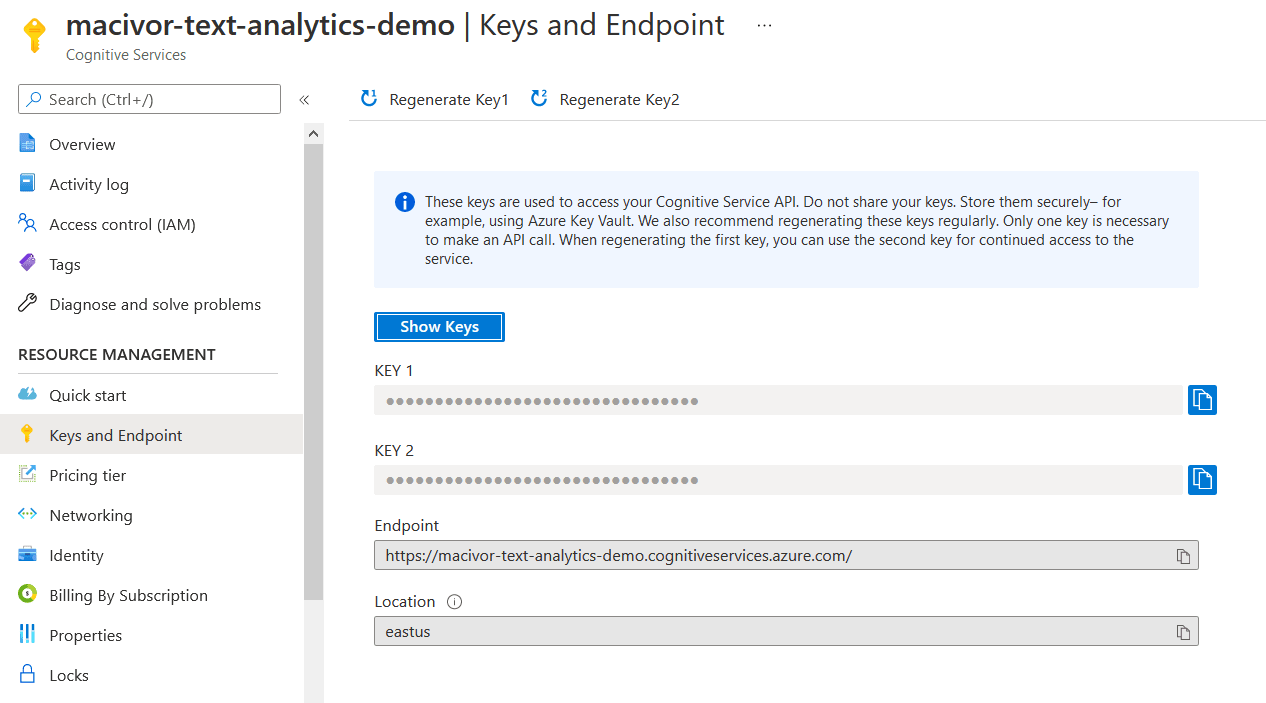

Azure Cognitive Services

Azure Cognitive Services requires the fewest libraries, which makes sense considering we'll be offloading all of the model building to an Azure service.

using Azure;

using System;

using System.Globalization;

using Azure.AI.TextAnalytics;

Setup

Each of these ecosystems requires a varying degree of work to get set up.

For Apache Spark, feel free to fire up your favorite code editor, install Java 8, and try to get this running. See the code on GitHub.

For Python and ML.NET I've set up a couple of Binder projects for you to try them out.

Python

Surprisingly, the Python process is a little more hands-on during the featurizing of the text. Here we need to add a parameter while loading in the data, quoting = 3, which tells pandas to treat quote characters in the text as ordinary characters rather than as field delimiters.
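To see what that quoting setting changes, here is a minimal sketch using only Python's standard csv module, where the value 3 corresponds to the constant csv.QUOTE_NONE (pandas accepts the same constants for its quoting parameter). The two sample rows and the read_rows helper are made up for illustration and are not taken from the review file.

```python
import csv
import io

# Two hypothetical rows in the same tab-separated layout as the review file.
sample = 'Review\tLiked\n"Wow" is all I can say.\t1\nThe "special" was cold.\t0\n'

def read_rows(text, quoting):
    """Parse tab-separated text with the given csv quoting mode."""
    return list(csv.reader(io.StringIO(text), delimiter="\t", quoting=quoting))

# csv.QUOTE_NONE has the numeric value 3, the same number used in the article.
rows_literal = read_rows(sample, csv.QUOTE_NONE)

# The default mode treats a leading quote as the start of a quoted field,
# so the quote characters around "Wow" are consumed by the parser.
rows_default = read_rows(sample, csv.QUOTE_MINIMAL)

print(rows_literal[1][0])
print(rows_default[1][0])
```

With quoting disabled, the review text comes through exactly as written, quotes included. That matters for free-form reviews, where a stray or unbalanced quote could otherwise change how a row is split.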

Next, while iterating through the reviews, we need to remove "stopwords": common words such as articles and determiners (the, this, that, etc.), pronouns, and the like. These words are removed since they don't carry any particular meaning that we need to interpret to get the sentiment. We are also using a class to reduce each word to its base form, a step usually called stemming. So "loved" is changed to "love", for example. This allows us to reduce the size of the set of words without removing sentiment meaning: even though "loved" is past tense, it still conveys the same positive context as "love", so there is little benefit in including both words in our set of words to be analyzed.

One interesting thing in this step is that we have to explicitly include the word 'not'. 'Not' is considered a stop word and would be removed - but it conveys sentiment so it should be included. It's an interesting detail in the Python implementation that more or less implies you need to pay close attention to the process here.
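The effect of keeping 'not' is easy to see in a small sketch. The stop-word list below is a deliberately tiny, hypothetical one written out by hand so the example runs without any downloads; a real run would use a full list such as the English one that ships with NLTK. The remove_stopwords helper is also just an illustration, and it covers only the stop-word filter, not the stemming step.

```python
import re

# Hypothetical, deliberately tiny stop-word list for illustration only.
# Like the full English list, it contains the word "not".
STOPWORDS = {"the", "a", "an", "this", "that", "i", "it", "was", "is", "and", "not"}

def remove_stopwords(text, stopwords):
    """Lowercase the text, keep letters only, and drop every stop word."""
    words = re.sub("[^a-zA-Z]", " ", text).lower().split()
    return " ".join(w for w in words if w not in stopwords)

review = "The crust was not good."

# Naive filtering throws "not" away, which flips the apparent sentiment.
naive = remove_stopwords(review, STOPWORDS)

# Taking "not" out of the stop-word set keeps the negation in place.
adjusted = remove_stopwords(review, STOPWORDS - {"not"})

print(naive)     # crust good
print(adjusted)  # crust not good
```

The first result reads like a positive review, while the second preserves the negative meaning, which is why the stop-word list needs this one exception.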

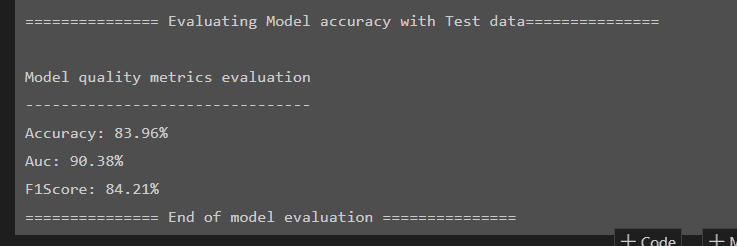

dataset = pd.read_csv('Restaurant_Reviews.tsv', delimiter = '\t', quoting = 3)